Navigating Data Gravity Through Edge-Cloud Integration

Summary: This blog explains data gravity and how growing AI and IoT-driven data volumes impact data center operations. It explores edge-cloud integration, infrastructure optimization, and Mitsubishi Electric solutions that help reduce latency, control energy usage, and build resilient, future-ready data centers.

Driven by artificial intelligence (AI), the Internet of Things (IoT), and global operations, data volumes are growing faster than organizations can manage. This rapid growth has led to a phenomenon called data gravity. Data gravity describes how massive data sets attract applications, services, and additional data, which makes moving and managing those data sets increasingly complex. To stay competitive, organizations need to rethink how they process, store, and optimize data across edge and cloud environments. Mitsubishi Electric offers edge-to-cloud integration solutions to help organizations overcome these challenges and optimize data center energy usage.

What is Data Gravity?

The concept of data gravity, first introduced by Dave McCrory in 2010, compares the way data attracts related elements such as apps, services, and additional data to the way physical gravity pulls objects toward Earth. As organizations accumulate data, that data attracts even more, which makes it harder to move or copy. With the rise of artificial intelligence and the rapid growth of IoT, unprecedented amounts of data are flowing into data lakes, intensifying data gravity. As a result, organizations with large data stores find that their infrastructure, business processes, and analytics cluster around the data source itself.

Why Data Gravity Matters for Data Center Operations

Data gravity poses significant challenges for data centers tasked with transferring data from one location to another. As data continues to pool in one place, it becomes increasingly difficult to move large data sets across regions or clouds. Moving data repeatedly is costly and inefficient. It leads to higher bandwidth costs, increased data center energy consumption, application slowdowns, and frustrated customers who feel locked in because moving their data is too complex and expensive. Data regulations and sovereignty laws restricting where data can reside add further complications. Meanwhile, competition for available power, renewable energy resources, and hybrid grid capabilities puts even more pressure on data center operators. Although data gravity can attract additional data and clients, the difficulty in moving data creates serious operational headaches.
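To put the difficulty of moving large data sets in perspective, a rough back-of-the-envelope estimate of transfer time can help. The sketch below assumes decimal units (1 TB = 10^12 bytes), a constant link speed, and a hypothetical efficiency factor of 0.8 to stand in for protocol overhead and contention. Real transfers depend on many more factors, so treat the numbers as illustrative only.

```python
def transfer_hours(size_tb: float, bandwidth_gbps: float, efficiency: float = 0.8) -> float:
    """Rough estimate of hours needed to move size_tb terabytes over a network link.

    Assumptions (illustrative, not measured):
    - decimal units: 1 TB = 10**12 bytes = 8 * 10**12 bits
    - the link sustains bandwidth_gbps * efficiency for the whole transfer
    """
    bits = size_tb * 8e12
    effective_bits_per_second = bandwidth_gbps * 1e9 * efficiency
    return bits / effective_bits_per_second / 3600


if __name__ == "__main__":
    # Compare a small, a large, and a petabyte-scale data set over a 10 Gbps link.
    for size in (1, 100, 1000):
        print(f"{size:>5} TB over 10 Gbps: {transfer_hours(size, 10):.1f} hours")
```

Under these assumptions, moving 1 PB (1,000 TB) over a 10 Gbps link ties up the connection for roughly eleven and a half days, before any bandwidth charges are counted.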

Market Shifts Toward More Resilient Infrastructure

As data gravity intensifies, organizations are adopting strategies to minimize data movement and improve efficiency. Data center operators are increasingly using edge computing solutions to process data near its source, reducing latency, network costs, and data center energy usage. Multi-cloud architectures help prevent data silos by distributing data across multiple platforms. A diversified storage approach combining edge locations for processing, colocation facilities for scalable compute, and on-premises environments for sensitive data supports scalability for future growth. Together, these strategies create a resilient infrastructure that helps data centers mitigate the challenges of data gravity.
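One common way edge computing reduces data movement is to summarize raw readings locally and send only the summaries upstream. The sketch below is a hypothetical example, not part of any Mitsubishi Electric product: it collapses a stream of sensor readings into one (min, max, mean) tuple per fixed-size window, so a cloud or core site receives far fewer values than the edge device collected.

```python
from statistics import mean


def summarize_window(readings: list[float], window_size: int) -> list[tuple[float, float, float]]:
    """Collapse raw readings into one (min, max, mean) tuple per window.

    Hypothetical edge-side aggregation: only these summaries would be
    forwarded to the cloud, while the raw readings stay at the edge.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        summaries.append((min(window), max(window), mean(window)))
    return summaries


if __name__ == "__main__":
    # One hour of 1 Hz readings from a hypothetical temperature sensor.
    raw = [20.0 + (i % 10) * 0.1 for i in range(3600)]
    summaries = summarize_window(raw, window_size=60)
    sent_values = len(summaries) * 3
    print(f"raw values: {len(raw)}, values sent upstream: {sent_values}")
```

In this example, 3,600 raw values become 60 summaries (180 values), a 20x reduction in what crosses the network. The trade-off is that per-reading detail is no longer available centrally, so the window size and the statistics kept should match what downstream analytics actually need.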

Software and Hardware Optimization to Manage Data Gravity

Beyond storage and edge-cloud integration, strategic software and hardware optimization is essential to managing data gravity effectively. Key software tools for more intelligent data management include deduplication, compression, and storage tiering. These techniques shrink the storage footprint and make data easier to manage and move. On the hardware side, SSDs (solid state drives), low-latency memory, and advanced NICs (network interface cards) help eliminate bottlenecks and balance data loads. Data center systems should be carefully configured to optimize performance, streamline data flow, and minimize data gravity challenges. These optimizations not only improve operational efficiency and energy usage but also increase client satisfaction.
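To illustrate how deduplication and compression work together, here is a minimal sketch using only Python's standard library. It assumes simple fixed-size chunking (production systems often use variable-size, content-defined chunking) and a hypothetical 4 KiB chunk size. Each unique chunk is identified by its SHA-256 digest and stored once in compressed form, and an ordered "recipe" of digests is enough to rebuild the original data.

```python
import hashlib
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


def dedup_and_compress(data: bytes, chunk_size: int = CHUNK_SIZE) -> tuple[dict[str, bytes], list[str]]:
    """Split data into fixed-size chunks, store each unique chunk once (compressed).

    Returns (store, recipe): store maps a chunk's SHA-256 digest to its
    zlib-compressed bytes; recipe lists digests in order to rebuild data.
    """
    store: dict[str, bytes] = {}
    recipe: list[str] = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # duplicate chunks are stored only once
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return store, recipe


def restore(store: dict[str, bytes], recipe: list[str]) -> bytes:
    """Rebuild the original bytes by decompressing chunks in recipe order."""
    return b"".join(zlib.decompress(store[digest]) for digest in recipe)


if __name__ == "__main__":
    # Highly repetitive sample data: 20 chunks, but only 2 distinct ones.
    data = b"A" * CHUNK_SIZE * 10 + b"B" * CHUNK_SIZE * 10
    store, recipe = dedup_and_compress(data)
    stored_bytes = sum(len(blob) for blob in store.values())
    print(f"original: {len(data)} bytes, stored: {stored_bytes} bytes, unique chunks: {len(store)}")
    assert restore(store, recipe) == data
```

The sample data is deliberately repetitive, so the savings here are far larger than typical. Real-world reductions depend heavily on how much duplication and compressibility the data actually has.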

Mitsubishi Electric’s Approach to Data Gravity

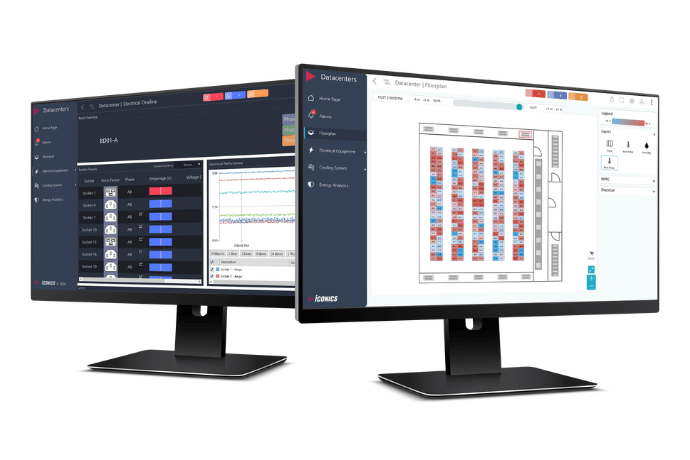

Mitsubishi Electric delivers innovative solutions to help data centers overcome data gravity challenges. Our technologies optimize responsiveness and help operators manage data more strategically across edge, cloud, and on-premises environments, reducing data movement and latency. One solution for mitigating the adverse effects of data gravity is GENESIS.

GENESIS

A single platform for monitoring and optimizing edge sites and cloud-connected facilities across full-scale data centers, GENESIS provides real-time visibility into equipment performance, cooling, and power, while supporting intelligent decisions about where data should be stored. It also integrates with new systems, enabling easy oversight of hybrid infrastructures. Thanks to GENESIS, data center operators can strategically process data at the edge, reduce unnecessary data transfers, and maintain a more balanced data ecosystem.

Building a Future-Ready Data Center

As AI and IoT continue to generate enormous data volumes, mitigating data gravity is essential. By combining edge-cloud integration and optimized hardware/software, data center operators can lower latency, improve energy efficiency, and ensure scalable, resilient infrastructure. With Mitsubishi Electric’s GENESIS, you can build a future-ready data center that remains agile and efficient as data volumes grow.